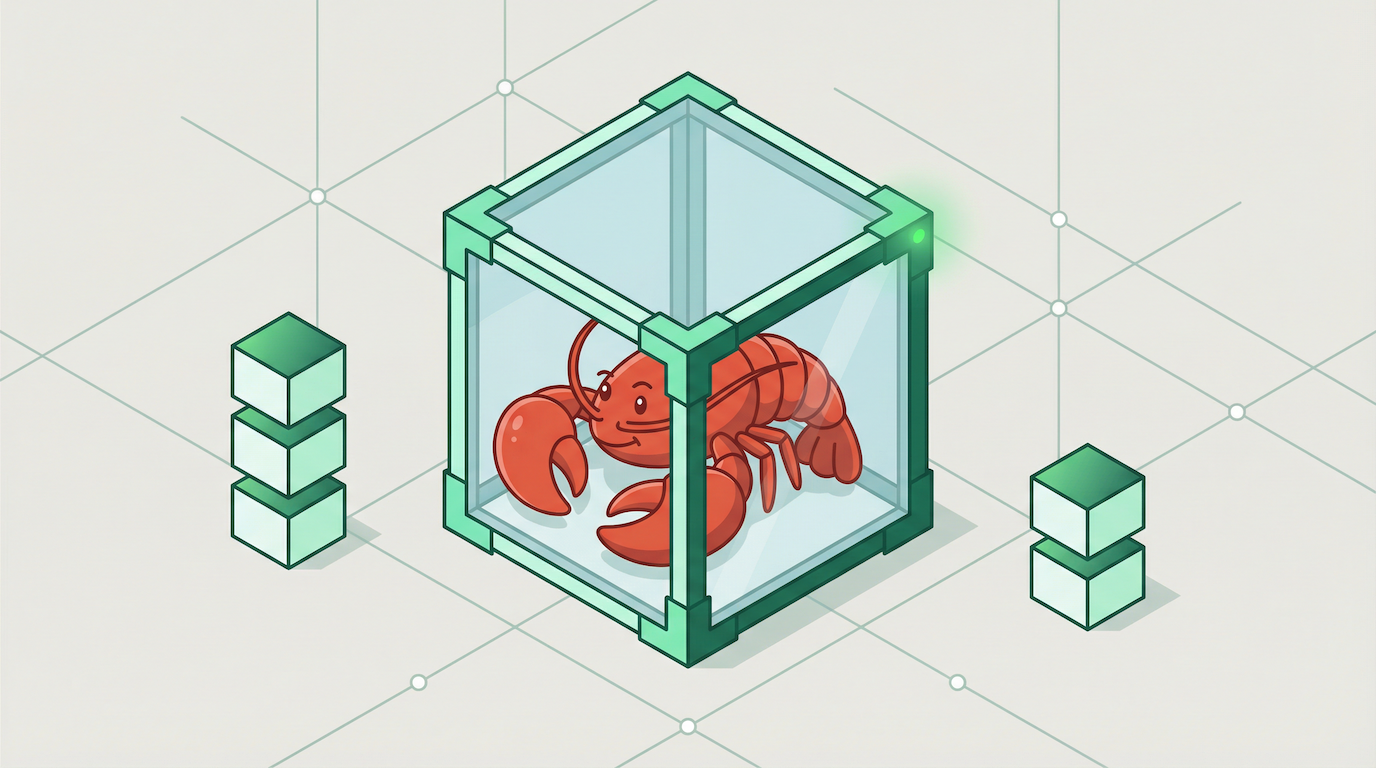

The evolution of containerization in computing has fundamentally transformed how software is developed, deployed, and managed. While early technologies like Solaris Containers and FreeBSD Jails laid the groundwork in the early 2000s, it was Docker, launched in 2013, that truly revolutionized the industry. Docker has become synonymous with containerization, serving as the de facto standard in modern software development. From small startups to large enterprises, Docker containers are now ubiquitous, enabling developers to package applications and their dependencies into portable, lightweight units that can run consistently across various environments.

However, as the software development landscape continues to evolve, so do the challenges and requirements of modern development workflows. One such challenge is the rise of large language models (LLMs) in code generation. While LLMs have the potential to accelerate development, they also introduce unique complexities and risks, particularly when integrated into containerized environments like Docker. This has led to the emergence of alternative solutions, such as Runloop Devboxes, which aim to make AI-generated code much easier to deploy and to address its pitfalls while maintaining the benefits of containerization.

The Rise of Docker and the Containerization Revolution

Docker’s introduction in 2013 marked a turning point in software development. By leveraging Linux kernel features like cgroups and namespaces, Docker enabled developers to create isolated environments that could run applications consistently across different systems. This eliminated the infamous "it works on my machine" problem and streamlined the development-to-deployment pipeline. Docker’s success can be attributed to several key factors:

- Portability: Containers encapsulate an application and its dependencies, ensuring it runs the same way in development, testing, and production environments.

- Efficiency: Unlike traditional virtual machines (VMs), containers share the host system’s kernel, making them lightweight and fast to start up.

- Scalability: Docker’s own tooling, such as Docker Compose, together with orchestrators like Kubernetes that run container images, enables scaling and orchestration of containerized applications.

- Community and Ecosystem: Docker’s open-source nature fostered a vibrant community, leading to a rich ecosystem of tools, images, and integrations.
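To make the kernel features mentioned above a little more concrete, here is a minimal sketch that assembles a `docker run` command line. The resource flags (`--memory`, `--cpus`, `--pids-limit`) are enforced through cgroups, while `--network none` gives the container its own network namespace with no external connectivity. The helper name `sandboxed_run_command`, the default limits, and the `python:3.12-slim` image are assumptions chosen for illustration; the sketch only builds and prints the command, it does not run it.

```python
import shlex


def sandboxed_run_command(image, command, memory="256m", cpus="0.5", pids_limit=64):
    """Build (but do not execute) a docker run argv with basic limits.

    Hypothetical helper for illustration; the default values are arbitrary.
    """
    return [
        "docker", "run", "--rm",
        "--memory", memory,               # memory cap, enforced via cgroups
        "--cpus", cpus,                   # CPU share, enforced via cgroups
        "--pids-limit", str(pids_limit),  # process-count cap, enforced via cgroups
        "--network", "none",              # isolated network namespace, no connectivity
        image,
        *command,
    ]


argv = sandboxed_run_command("python:3.12-slim", ["python", "-c", "print('hello')"])
print(shlex.join(argv))
```

Returning the arguments as a list, rather than a single string, means they can be passed to `subprocess.run` without going through a shell.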

Despite these advantages, Docker containers are not without their limitations, especially when it comes to emerging use cases like LLM-driven code development.

The Challenges of LLM-Driven Code Development in Docker Containers

Large language models such as OpenAI’s GPT family, and coding assistants built on them such as GitHub Copilot, have become powerful tools for automating code generation. However, integrating these models into Docker-based workflows introduces several challenges, particularly in terms of security, quality, and reliability.

Security Risks

One of the most significant concerns with LLM-generated code is the potential for security vulnerabilities. While Docker containers provide a degree of isolation, they are not immune to security risks, especially when dealing with AI-generated code. Some of the key security concerns include:

- AI Models Generating Vulnerable Code: LLMs may inadvertently produce code with security flaws, such as SQL injection points, unsafe dependencies, or hardcoded credentials.

- Reproduction of Known Vulnerabilities: If an LLM is trained on public repositories, it might reproduce known vulnerabilities or deprecated practices present in the training data.

- Malicious Code Insertion: There is a risk that an LLM trained on compromised or malicious data could generate code with intentional backdoors or other harmful elements.

- Container Breakouts: Because containers share the host system’s kernel, their isolation is weaker than that of hardware-virtualized machines. A compromised container could potentially allow an attacker to access the host system or other containers.
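As an illustration of the first concern in the list above, the following sketch contrasts a query built by string interpolation, a pattern that generated code can easily contain, with a parameterized query. It uses Python's standard `sqlite3` module with an in-memory database; the table, the function names, and the payload are made up for the example.

```python
import sqlite3

# In-memory database with two illustrative rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "user")],
)


def find_user_unsafe(conn, name):
    # Vulnerable: the input is spliced directly into the SQL text.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the value is bound separately from the SQL text.
    query = "SELECT name, role FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


payload = "nobody' OR '1'='1"
unsafe_rows = find_user_unsafe(conn, payload)  # condition is always true: every row comes back
safe_rows = find_user_safe(conn, payload)      # payload is treated as a literal name: no rows
```

Running this inside a container does not change the outcome: the isolation boundary limits what the process can reach, not what the query returns.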

Quality and Reliability Issues

Beyond security, LLM-generated code often suffers from quality and reliability issues. These problems can be exacerbated when running such code in Docker containers, where debugging and troubleshooting can be more challenging. Some common issues include:

- Lack of Error Handling: AI-generated code often lacks proper error handling and edge case coverage, leading to unexpected failures in production.

- Logical Flaws: The code may be syntactically correct but logically flawed, resulting in incorrect or inefficient implementations.

- Resource Inefficiency: LLMs may produce code that is resource-intensive or poorly optimized, leading to performance bottlenecks in containerized environments.

- Overly Simplified Solutions: AI-generated code might provide quick fixes that work in simple scenarios but fail to scale or handle complex requirements.

- Incomplete Implementations: The code might appear functional at first glance but fail under specific conditions or edge cases.
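A small, deliberately simple sketch of the error-handling and edge-case problems listed above: the first function looks correct and works on typical input, but fails on an empty sequence with an error that says little about the cause. The second makes the edge case explicit. Both function names are hypothetical.

```python
def average_naive(values):
    # Works for typical input, but raises ZeroDivisionError on an empty list.
    return sum(values) / len(values)


def average_checked(values):
    # Materialize once so generators and other iterables are handled too.
    values = list(values)
    if not values:
        raise ValueError("average of an empty sequence is undefined")
    return sum(values) / len(values)
```

Whether the empty case should raise, return `None`, or return a default is a design decision; the point is that the decision is made deliberately rather than left to whatever the arithmetic happens to do.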

These challenges highlight the need for a more robust development environment that can mitigate the risks associated with LLM-generated code while maintaining the benefits of containerization.

Runloop Devboxes: A Modern Solution for AI-Driven Development

Runloop Devboxes have emerged as a compelling alternative to traditional Docker containers, particularly for LLM-driven code development. These environments are designed to address the unique challenges posed by AI-generated code while providing a seamless and secure development experience.

Key Features of Runloop Devboxes

- Enhanced Security: Runloop Devboxes leverage hardware-based virtualization to provide stronger isolation compared to Docker containers. This reduces the risk of container breakouts and ensures that any vulnerabilities in AI-generated code are contained.

- Integrated Debugging Tools: Devboxes come equipped with advanced debugging and monitoring tools, making it easier to identify and fix issues in AI-generated code.

- Pre-Configured Environments: Runloop Devboxes offer pre-configured development environments that include all necessary dependencies, reducing the likelihood of compatibility issues.

- Scalability and Performance: These environments are optimized for performance, ensuring that resource-intensive AI-generated code runs efficiently.

- Collaboration Features: Devboxes support real-time collaboration, enabling teams to work together on AI-generated code and share insights seamlessly.

Addressing the Pitfalls of AI-Generated Code

Runloop Devboxes are specifically designed to mitigate the risks associated with LLM-driven code development. For example:

- Automated Security Scans: Devboxes can integrate with security tools to automatically scan AI-generated code for vulnerabilities before it is deployed.

- Quality Assurance Workflows: Built-in testing frameworks ensure that AI-generated code meets quality standards and handles edge cases effectively.

- Version Control Integration: Devboxes integrate with GitHub, allowing developers to safely test the impact of AI-generated code and revert to stable versions if needed.

- Customizable Templates: Developers can create blueprints for common use cases, ensuring that AI-generated code adheres to best practices and organizational standards.
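To give a sense of what an automated check on generated code might look like, here is a minimal, generic sketch that flags hardcoded credentials in Python source before it is deployed. It is not Runloop's API and does not represent any particular security tool; the function name, the regular expression, and the sample input are assumptions made for illustration, and a real pipeline would rely on a dedicated scanner.

```python
import ast
import re

# Hypothetical heuristic: variable names that usually hold secrets.
SECRET_NAME = re.compile(r"(password|passwd|secret|token|api_?key)", re.IGNORECASE)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line, name) pairs where a secret-like name is assigned a string literal."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        # Only non-empty string literals count; lookups such as os.environ[...] do not.
        if not (isinstance(value, ast.Constant) and isinstance(value.value, str) and value.value):
            continue
        for target in node.targets:
            if isinstance(target, ast.Name) and SECRET_NAME.search(target.id):
                findings.append((node.lineno, target.id))
    return sorted(findings)


generated = '''
import os
API_KEY = "sk-test-123"
db_password = os.environ["DB_PASSWORD"]
timeout = "30"
'''

findings = find_hardcoded_secrets(generated)
print(findings)  # [(3, 'API_KEY')]
```

A check like this can serve as a gate: if `findings` is non-empty, the generated change is rejected or sent back for review instead of being deployed.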

By combining the benefits of containerization with easy deployment, advanced security, and quality assurance features, Runloop Devboxes provide a more reliable and efficient solution for modern software development.

The Future of Containerization and AI-Driven Development

The evolution of containerization has undeniably transformed software development, with Docker leading the charge. However, as the industry continues to embrace AI-driven tools like LLMs, the limitations of traditional containerization become increasingly apparent. Runloop Devboxes represent the next step in this evolution, offering a secure, scalable, and efficient environment for developing and deploying AI-generated code.

As we look to the future, it’s clear that the integration of AI and containerization will play a pivotal role in shaping the software development landscape. By adopting innovative solutions like Runloop Devboxes, developers can harness the power of AI while mitigating its risks, ensuring that the code they produce is not only fast and efficient but also secure and reliable. The journey of containerization is far from over, and the best is yet to come.