The rise of Large Language Models (LLMs) has redefined how code is written, optimized, and integrated into software development workflows. Tools like Cursor and GitHub Copilot have brought AI-generated code to the forefront, accelerating development but also introducing new challenges in ensuring code quality. As this technology evolves, so does the need for robust methods to assess its outputs. This is where evaluation and benchmarking come into play—two terms often used interchangeably, yet fundamentally distinct in their purpose and application.

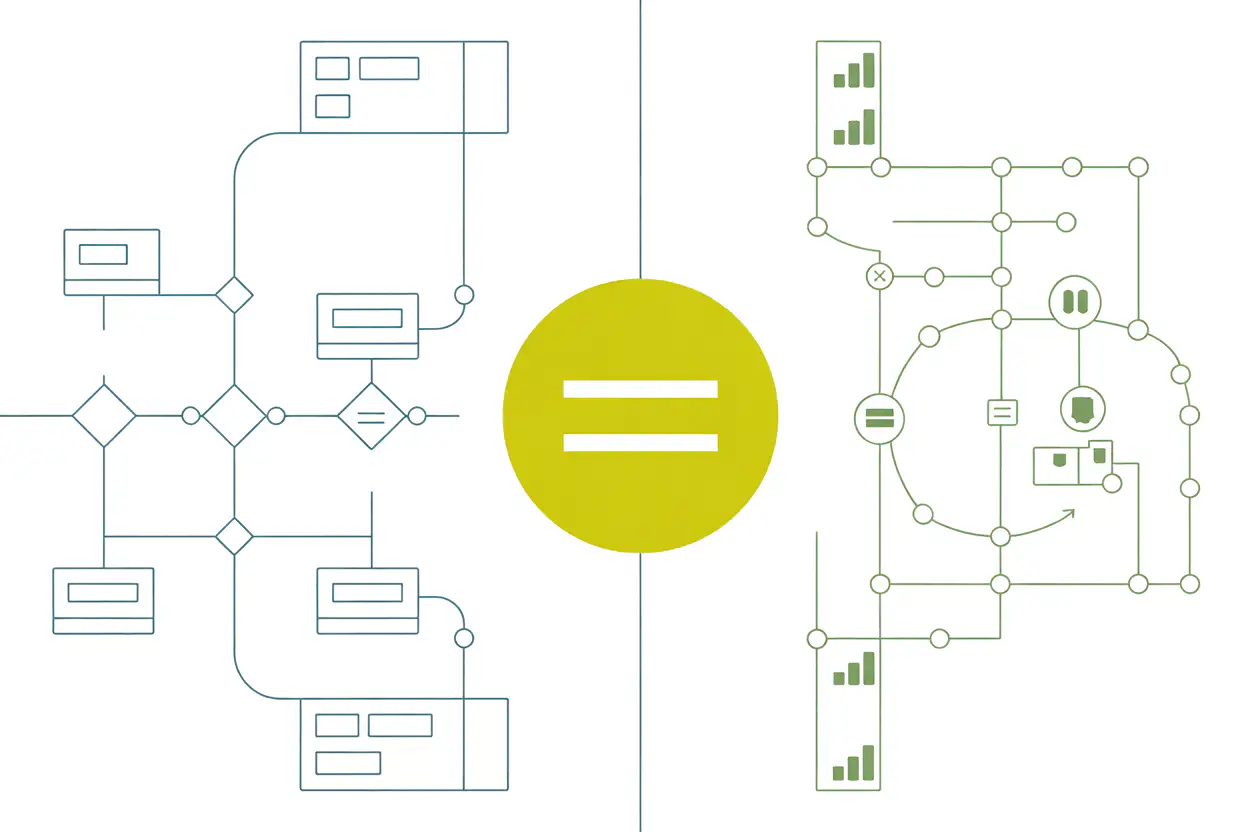

Understanding the differences between evaluation and benchmarking is essential for developers, ML engineers, and organizations aiming to integrate AI-generated code into their production pipelines. The table below gives an overview of the key differences.

| Aspect | Benchmarking | Evaluation |
| --- | --- | --- |
| Purpose | Standardized comparison using predefined tasks and data | Comprehensive assessment in real-world scenarios |
| Focus | Metrics such as accuracy, speed, and syntactic correctness | Code quality, maintainability, performance, security |
| Examples | HumanEval, MBPP, SWE-Bench | Custom tests, security audits, performance under load |
| Strengths | Objective model comparison, reproducibility, progress tracking | Identifies practical issues, ensures production readiness |
| Limitations | Assumes controlled conditions, risks overfitting to the test set, misses contextual nuance | Partly subjective, resource-intensive, harder to standardize |
| Output | Quantitative scores | Qualitative and quantitative insights |
| Context Sensitivity | Low: general tasks applied across models | High: specific to deployment environments and use cases |
| Standardization | High: uniform datasets and metrics | Low: varies with project requirements |
| Use Case | Comparing model versions or different models | Assessing deployment readiness and ongoing performance |
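The "Quantitative scores" row is easiest to see with a concrete metric. Code-generation benchmarks such as HumanEval commonly report pass@k: the probability that at least one of k sampled completions passes a problem's unit tests. The sketch below implements the unbiased estimator described in the HumanEval paper (sample n ≥ k completions, count the c that pass, then compute 1 − C(n−c, k)/C(n, k)). The function names and the sample numbers are illustrative, not part of any benchmark's official tooling.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled, c: completions that passed the tests, k: sample budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every possible set of k samples contains at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results: 3 of 10 samples passed on one problem, 0 of 10 on another.
print(round(benchmark_score([(10, 3), (10, 0)], k=1), 4))  # 0.15
```

Averaging per-problem estimates, rather than pooling all samples, keeps every problem equally weighted in the final score.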

Case Study: Runloop DevBoxes and AI Code Quality

Runloop’s DevBoxes exemplify how evaluation and benchmarking intersect in modern development environments. DevBoxes are purpose-built to address the unique challenges of AI-assisted development, providing tools that extend beyond traditional benchmarks.

- Benchmark Integration: DevBoxes support benchmarks like HumanEval and SWE-Bench to assess model performance on standardized tasks.

- Comprehensive Evaluation Tools:

  - Security Scans: Automated tools that detect vulnerabilities in AI-generated code.

  - Performance Monitoring: Tools to evaluate efficiency and resource use under real-world conditions.

- Version Control for AI Artifacts: Manages prompts, datasets, and AI outputs for reproducibility and auditability.

- Collaboration Features: Facilitates team efforts in refining AI-generated code.

By combining benchmarking rigor with comprehensive evaluation, Runloop’s DevBoxes help developers get the most out of AI-assisted development without compromising code quality or security.

Best Practices for Evaluating and Benchmarking AI-Generated Code

Start with benchmarks to gain baseline insights into your AI model's capabilities. Standardized benchmarks like HumanEval or MBPP can provide a quick snapshot of performance on predefined tasks. However, it’s crucial to follow this with real-world evaluations to ensure the code performs well in practical applications.
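In practice, a benchmark run boils down to executing model-generated code against a fixed set of test cases and counting how many problems it solves. The sketch below assumes a simplified, hypothetical problem format (an entry-point name plus input/expected-output pairs); real harnesses define their own formats and should run untrusted candidates in an isolated sandbox rather than with an in-process `exec`.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Problem:
    # Hypothetical format: the function to call plus (args, expected) test cases.
    entry_point: str
    cases: list[tuple[tuple[Any, ...], Any]]


def passes(candidate_src: str, problem: Problem) -> bool:
    """Return True if the candidate defines entry_point and passes every case."""
    namespace: dict[str, Any] = {}
    try:
        # WARNING: exec runs untrusted code in this process; sandbox it in real use.
        exec(candidate_src, namespace)
        fn: Callable[..., Any] = namespace[problem.entry_point]
        return all(fn(*args) == expected for args, expected in problem.cases)
    except Exception:
        # Syntax errors, missing functions, and runtime errors all count as failures.
        return False


def solve_rate(candidates: list[str], problems: list[Problem]) -> float:
    """Fraction of problems solved, with one candidate per problem (pass@1)."""
    solved = sum(passes(src, p) for src, p in zip(candidates, problems))
    return solved / len(problems)


problems = [
    Problem("add", [((1, 2), 3), ((-1, 1), 0)]),
    Problem("reverse", [(("abc",), "cba")]),
]
candidates = [
    "def add(a, b):\n    return a + b\n",
    "def reverse(s):\n    return s\n",  # buggy: returns the input unchanged
]
print(solve_rate(candidates, problems))  # 0.5
```

A score like this is a useful baseline, but it only says the code matched the expected outputs on these cases; it says nothing about security, performance, or maintainability.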

Implement multi-dimensional evaluation by assessing AI-generated code across various criteria such as correctness, security, performance, and maintainability. Automated tools can handle initial checks, but human review remains vital for aspects like code readability, ethical implications, and handling complex edge cases.
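Automated checks can be organized as a per-candidate scorecard, with any failing dimension routed to human review. The sketch below is one possible shape, assuming Python candidates: correctness from test cases, performance from wall-clock time, and maintainability from a deliberately crude proxy (the length of the longest function). The `Scorecard` fields and the thresholds are illustrative assumptions; security scanning would be a separate, dedicated check.

```python
import ast
import time
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Scorecard:
    correct: bool
    seconds: float         # wall-clock time to run all test cases
    longest_function: int  # in lines; a crude maintainability proxy
    flags: list[str] = field(default_factory=list)  # dimensions needing human review


def evaluate(src: str, entry_point: str, cases: list[tuple[tuple[Any, ...], Any]],
             max_seconds: float = 0.5, max_fn_lines: int = 40) -> Scorecard:
    tree = ast.parse(src)
    lengths = [node.end_lineno - node.lineno + 1
               for node in ast.walk(tree)
               if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))]
    longest = max(lengths, default=0)

    namespace: dict[str, Any] = {}
    # WARNING: executes untrusted code in-process; sandbox it in real pipelines.
    exec(compile(tree, "<candidate>", "exec"), namespace)
    fn = namespace[entry_point]

    start = time.perf_counter()
    try:
        correct = all(fn(*args) == expected for args, expected in cases)
    except Exception:
        correct = False
    seconds = time.perf_counter() - start

    card = Scorecard(correct, seconds, longest)
    if not correct:
        card.flags.append("correctness")
    if seconds > max_seconds:
        card.flags.append("performance")
    if longest > max_fn_lines:
        card.flags.append("maintainability")
    return card


card = evaluate("def add(a, b):\n    return a + b\n", "add", [((1, 2), 3)])
print(card.correct, card.longest_function, card.flags)  # True 2 []
```

Keeping the flags as a list, rather than collapsing everything into one number, tells reviewers which dimension needs their attention.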

Continuously monitor and iterate on both benchmarks and evaluations. AI models evolve rapidly, so update your assessment processes regularly to keep both benchmark metrics and real-world checks relevant and accurate.
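One lightweight way to make this monitoring routine is to store the metrics from a previous run as a baseline and flag any that drop on the next run, for example after a model upgrade or a prompt change. The sketch below assumes every metric is "higher is better"; the metric names and values in the usage lines are hypothetical.

```python
from typing import Optional


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.01) -> dict[str, tuple[float, Optional[float]]]:
    """Metrics that fell by more than `tolerance` or disappeared entirely."""
    dropped: dict[str, tuple[float, Optional[float]]] = {}
    for metric, old in baseline.items():
        new = current.get(metric)
        if new is None or new < old - tolerance:
            dropped[metric] = (old, new)
    return dropped


# Hypothetical numbers from two consecutive runs.
baseline = {"pass@1": 0.62, "security_pass_rate": 0.95}
current = {"pass@1": 0.58, "security_pass_rate": 0.96}
print(regressions(baseline, current))  # {'pass@1': (0.62, 0.58)}
```

The tolerance absorbs run-to-run noise; a missing metric is reported too, since a silently dropped check is itself a regression in the assessment process.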

Prioritize security and compliance by integrating security checks into both benchmarking and evaluation workflows. This ensures that AI-generated code not only meets functional requirements but also adheres to industry standards and organizational policies.
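As a rough illustration of a security gate that can run in both workflows, the sketch below walks the Python AST of a candidate and flags a few well-known risky patterns: `eval`/`exec`, `os.system`, `pickle.loads`, and `subprocess` calls with `shell=True`. The pattern list is an illustrative assumption and far from exhaustive (it misses aliased imports, for instance); a production pipeline would rely on a dedicated scanner such as Bandit or Semgrep rather than a hand-rolled check.

```python
import ast

# Illustrative, non-exhaustive set of dotted call names to flag.
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def call_name(node: ast.Call) -> str:
    """Reconstruct a dotted name such as "os.system" from a call target."""
    parts = []
    target = node.func
    while isinstance(target, ast.Attribute):
        parts.append(target.attr)
        target = target.value
    if isinstance(target, ast.Name):
        parts.append(target.id)
    return ".".join(reversed(parts))


def security_findings(src: str) -> list[tuple[int, str]]:
    """Return (line, issue) pairs for risky calls, sorted by line."""
    findings = []
    for node in ast.walk(ast.parse(src)):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name in RISKY_CALLS:
            findings.append((node.lineno, name))
        elif name.startswith("subprocess.") and any(
            kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True
            for kw in node.keywords
        ):
            findings.append((node.lineno, f"{name}(shell=True)"))
    return sorted(findings)


sample = (
    "import os, subprocess\n"
    "x = eval(input())\n"
    "os.system('ls')\n"
    "subprocess.run('ls', shell=True)\n"
)
for line, issue in security_findings(sample):
    print(f"line {line}: {issue}")
```

In CI, a non-empty result could fail the build or route the change to a security reviewer.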

Leverage collaborative tools to enhance teamwork between human developers and AI systems. Use version control and audit trails to maintain transparency and accountability, allowing teams to track changes, share insights, and refine code more effectively.
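For the audit-trail side, one simple pattern is an append-only log with one JSON record per AI-generated artifact, capturing the model, prompt, output, and content hashes so any change can be traced and compared later. The field names, the JSON Lines layout, and the `example-model` identifier below are assumptions for illustration, not a format any particular tool requires.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def artifact_record(model: str, prompt: str, output: str) -> dict:
    """Build one audit record for a generated artifact (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
        "prompt_sha256": sha256(prompt),
        "output": output,
        "output_sha256": sha256(output),
    }


def append_record(path: str, record: dict) -> None:
    """Append the record as a single JSON line; earlier lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")


record = artifact_record("example-model", "Write an add function.",
                         "def add(a, b):\n    return a + b\n")
print(json.dumps(record, sort_keys=True))
```

Calling `append_record` with a log path kept under version control gives the team a reviewable history: identical hashes show an artifact is unchanged, and differing hashes point straight to what was regenerated.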

A Balanced Approach for AI-Generated Code

As AI continues to reshape software development, robust assessment methods become critical. Benchmarking offers standardized metrics for model comparison, while evaluation ensures AI-generated code meets practical deployment standards.

Runloop’s DevBoxes integrate both approaches, helping developers navigate the complexities of AI-assisted coding. Understanding the differences between benchmarking and evaluation, and applying each where it fits, is essential for maintaining high standards in software development.

Mastering both processes isn’t just beneficial—it’s critical for success in the evolving landscape of AI-generated code.